Churn Prediction

AGUNG HANDAYANTO

Summary

The data used for this model comes from kaggle.com. The model predicts which customers are likely to switch to a competitor (churn) using a decision tree classifier, and it achieves an accuracy of 70%.
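To make the idea of a decision tree classifier concrete, here is a minimal pure-Python sketch of a CART-style tree that picks splits by Gini impurity. It is not the author's model: the two features (tenure in months, monthly charge) and the eight labelled customers are hypothetical toy data, the function names are illustrative, and a real project would more likely use scikit-learn's `DecisionTreeClassifier`.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(rows, labels):
    """Return the (feature, threshold) pair with the lowest weighted Gini, or None."""
    best, best_score = None, gini(labels)  # a split must beat the unsplit node
    n = len(rows)
    for f in range(len(rows[0])):
        for t in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= t]
            right = [y for row, y in zip(rows, labels) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best_score:
                best, best_score = (f, t), score
    return best

def build_tree(rows, labels, depth=0, max_depth=3):
    """Grow a binary tree; internal nodes are (feature, threshold, left, right)."""
    majority = Counter(labels).most_common(1)[0][0]
    if depth >= max_depth or len(set(labels)) == 1:
        return majority  # leaf: predict the most common class
    split = best_split(rows, labels)
    if split is None:
        return majority
    f, t = split
    go_left = [row[f] <= t for row in rows]
    left = build_tree([r for r, g in zip(rows, go_left) if g],
                      [y for y, g in zip(labels, go_left) if g], depth + 1, max_depth)
    right = build_tree([r for r, g in zip(rows, go_left) if not g],
                       [y for y, g in zip(labels, go_left) if not g], depth + 1, max_depth)
    return (f, t, left, right)

def predict(tree, row):
    """Walk from the root to a leaf and return the leaf's class."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree

def accuracy(tree, rows, labels):
    """Fraction of rows whose predicted class equals the true label."""
    return sum(predict(tree, r) == y for r, y in zip(rows, labels)) / len(labels)

# Hypothetical toy data: [tenure_months, monthly_charge]; label 1 = churned.
rows = [[1, 80], [2, 90], [3, 70], [24, 60], [36, 50], [48, 40], [5, 30], [60, 95]]
labels = [1, 1, 1, 0, 0, 0, 0, 0]

tree = build_tree(rows, labels)
print(tree)                      # (0, 3, 1, 0): tenure <= 3 -> churn
print(predict(tree, [2, 85]))    # 1
```

The `accuracy` helper only scores the rows it is given; to estimate how well a tree handles unseen customers, it should be applied to a held-out test set rather than to the training rows.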

Description

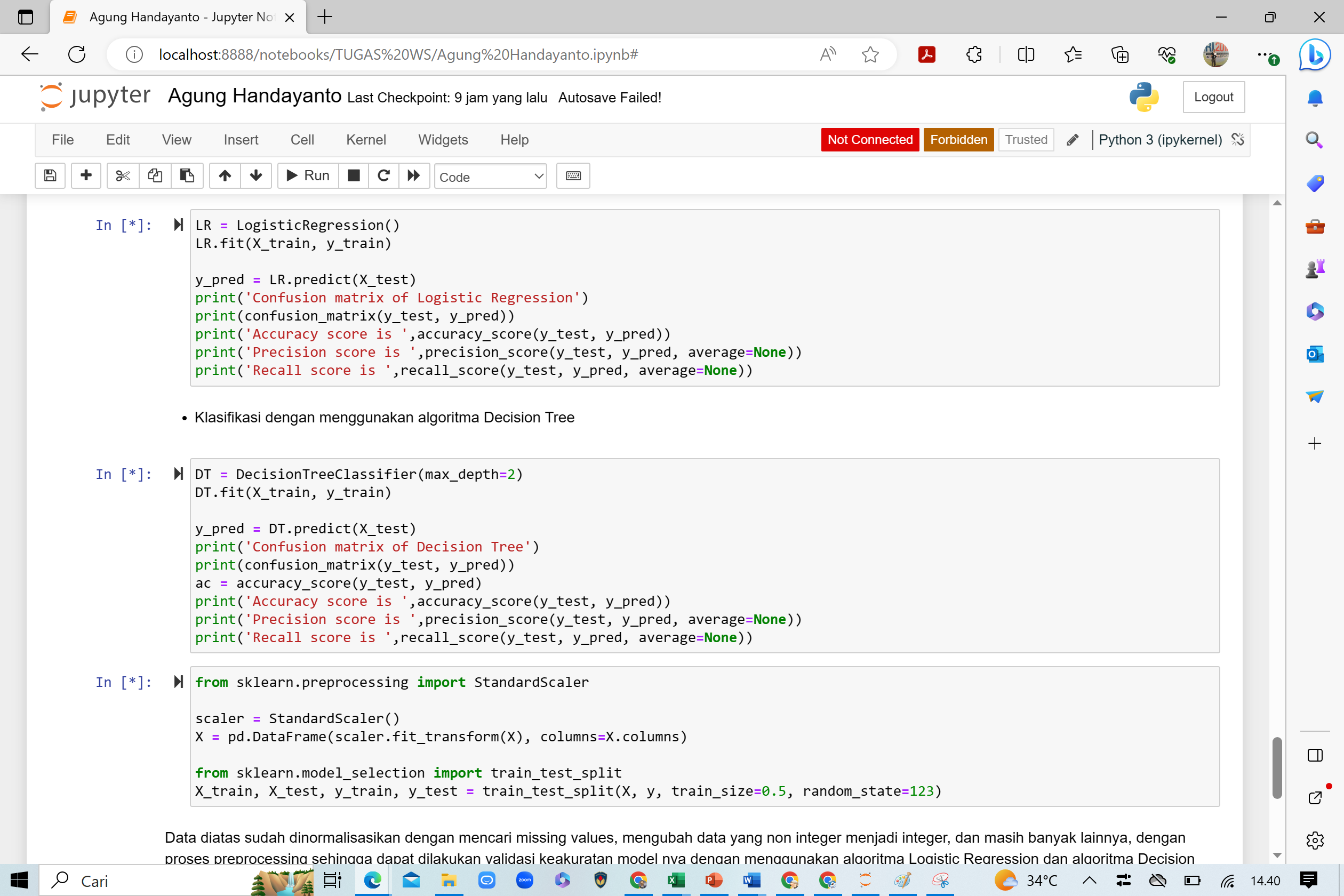

# Data Preprocessing

Data preprocessing is the process of preparing and cleaning raw data before it is used for analysis or model training. The exact steps vary with the type of data and the goals of the analysis, but the following are commonly used:

1. Import Libraries: Begin by importing the libraries or modules needed for data preprocessing. For example, in Python you can import libraries such as Pandas, NumPy, and Scikit-learn.

2. Data Cleaning: Clean the data by handling missing values, invalid values, and irrelevant noise. You can do the following:

- Remove rows or columns with missing or NaN values.

- Fill missing values with the mean, median, mode, or other appropriate methods.

- Detect and correct invalid or noisy data.
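The three cleaning options above can be sketched in plain Python with the standard-library `statistics` module. The records and column names (`tenure`, `monthly_charge`, `contract`, `churn`) are hypothetical, and treating a negative tenure as invalid is an assumed rule; with Pandas the same steps are usually done with `DataFrame.dropna` and `DataFrame.fillna`.

```python
import statistics

# Hypothetical toy records; None marks a missing value.
records = [
    {"tenure": 12, "monthly_charge": 70.0, "contract": "monthly", "churn": 1},
    {"tenure": None, "monthly_charge": 55.0, "contract": "yearly", "churn": 0},
    {"tenure": 30, "monthly_charge": None, "contract": None, "churn": 0},
    {"tenure": -5, "monthly_charge": 80.0, "contract": "monthly", "churn": 1},
    {"tenure": 48, "monthly_charge": 40.0, "contract": "yearly", "churn": None},
    {"tenure": 6, "monthly_charge": 90.0, "contract": "monthly", "churn": 1},
]

def clean(rows):
    # 1. Remove rows whose target label is missing (copies leave the input untouched).
    rows = [dict(r) for r in rows if r["churn"] is not None]
    # 2. Detect invalid values (assumed rule: tenure cannot be negative) and mark them missing.
    for r in rows:
        if r["tenure"] is not None and r["tenure"] < 0:
            r["tenure"] = None
    # 3. Fill the remaining gaps: median for numeric columns, mode for the categorical one.
    fills = {
        "tenure": statistics.median(r["tenure"] for r in rows if r["tenure"] is not None),
        "monthly_charge": statistics.median(
            r["monthly_charge"] for r in rows if r["monthly_charge"] is not None),
        "contract": statistics.mode(r["contract"] for r in rows if r["contract"] is not None),
    }
    for r in rows:
        for col, value in fills.items():
            if r[col] is None:
                r[col] = value
    return rows

cleaned = clean(records)
```

Rows with a missing label are dropped rather than filled, because imputing the target would invent outcomes. For the features, the median is less sensitive to outliers than the mean.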

3. Data Integration: If you have multiple data sources that need to be combined, this step involves merging data from various sources into one integrated dataset.
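Merging two sources usually means joining them on a shared key. The sketch below performs an inner join in plain Python; the `accounts` and `billing` tables and the `customer_id` key are hypothetical, and it assumes each key appears at most once in the right-hand source. In Pandas the equivalent is `pd.merge(accounts, billing, on="customer_id", how="inner")`.

```python
# Hypothetical sources keyed by a shared customer ID.
accounts = [
    {"customer_id": "C1", "tenure": 12, "contract": "monthly"},
    {"customer_id": "C2", "tenure": 40, "contract": "yearly"},
    {"customer_id": "C3", "tenure": 3, "contract": "monthly"},
]
billing = [
    {"customer_id": "C1", "monthly_charge": 70.0},
    {"customer_id": "C3", "monthly_charge": 95.0},
    {"customer_id": "C4", "monthly_charge": 20.0},
]

def inner_join(left, right, key):
    """Combine rows from both sources whose key matches; unmatched rows are dropped."""
    index = {row[key]: row for row in right}  # assumes keys in `right` are unique
    merged = []
    for row in left:
        match = index.get(row[key])
        if match is not None:
            merged.append({**row, **match})
    return merged

customers = inner_join(accounts, billing, "customer_id")  # C1 and C3 only
```

An inner join keeps only customers present in both sources (C2 has no billing row, C4 has no account row); a left join would keep every account and leave the missing billing fields to be handled in the cleaning step.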

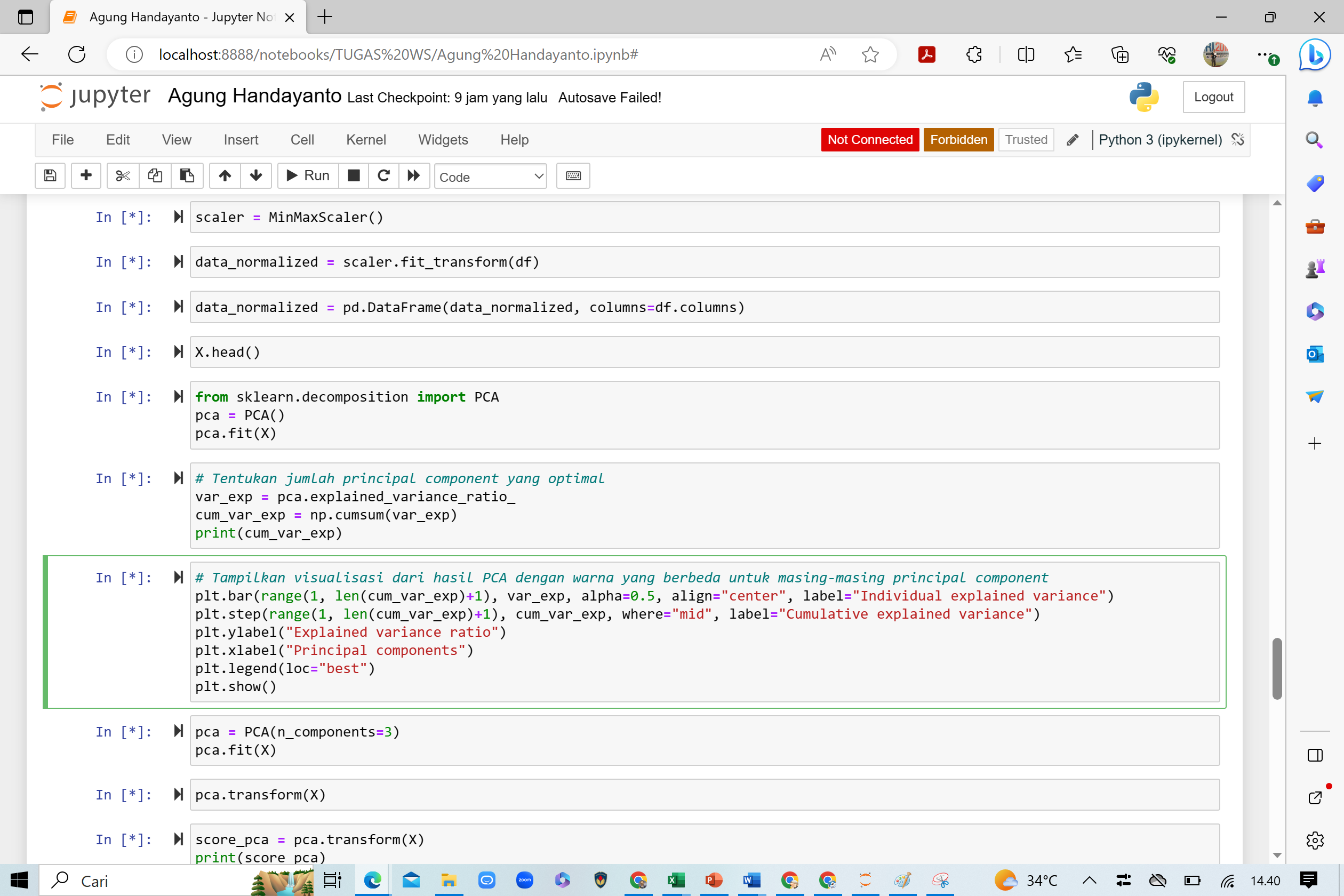

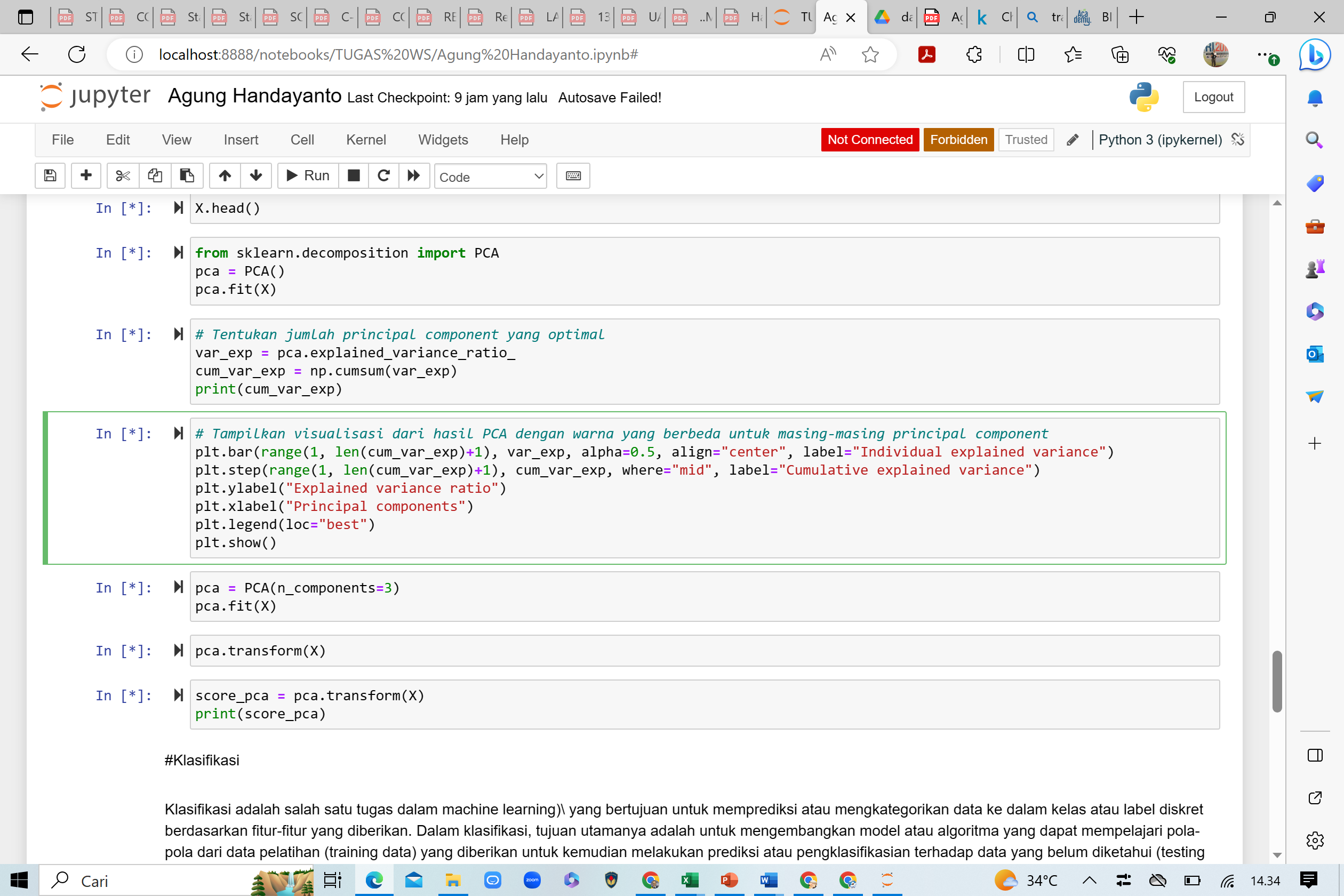

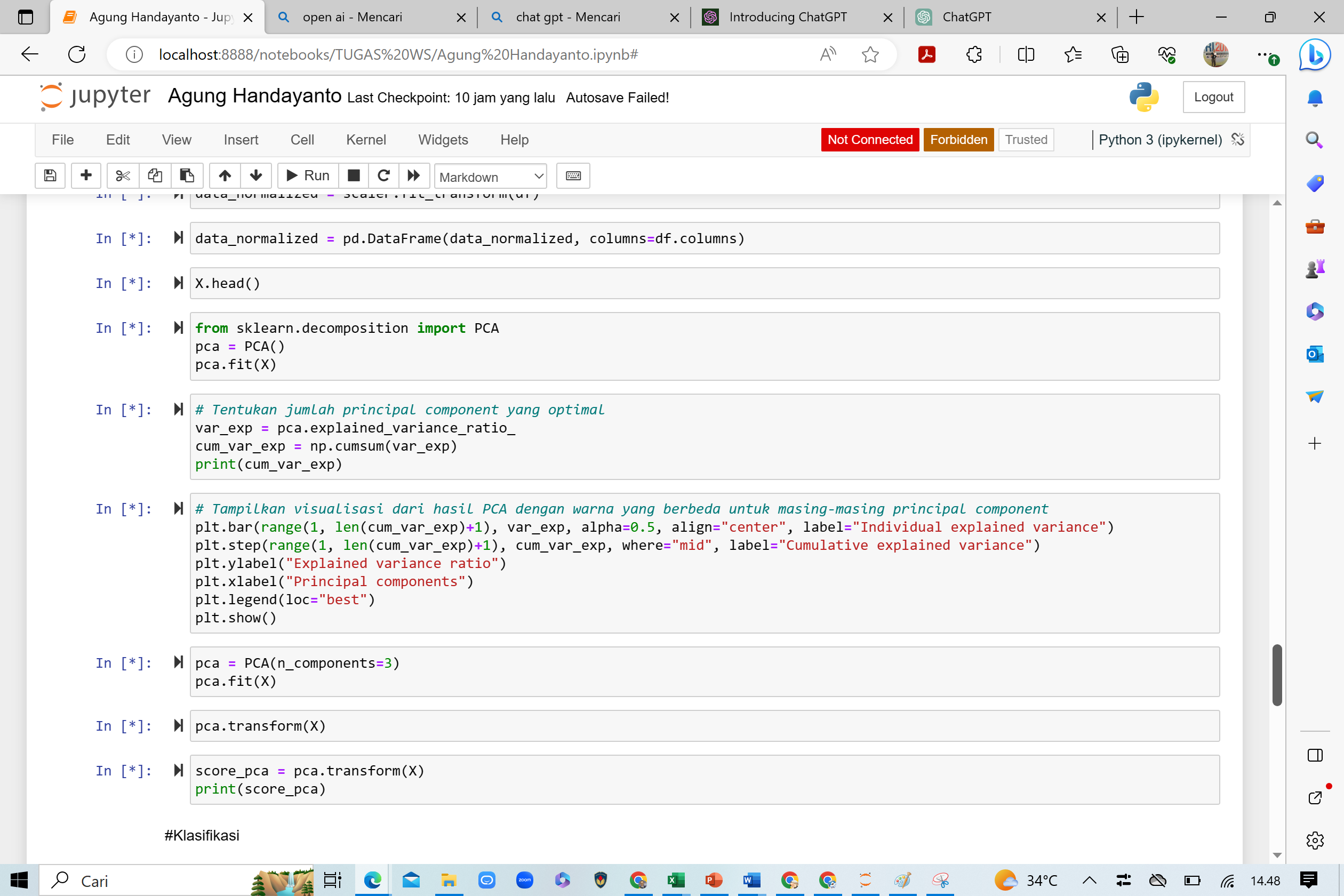

4. Data Transformation: This step involves transforming the data to meet the requirements of the analysis or model to be used. Some common techniques include:

- Normalizing numeric data so that all features share a common scale (for example, 0 to 1).

- Encoding categorical variables into numerical forms.

- Reducing dimensionality if necessary, for example with Principal Component Analysis (PCA) or feature selection.
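The first two transformations can be sketched without any library: min-max scaling maps each value x to (x - min) / (max - min), and one-hot encoding turns each category into its own 0/1 column. The sample values are hypothetical; scikit-learn's `MinMaxScaler` and `OneHotEncoder` (or Pandas' `get_dummies`) provide the same operations.

```python
def min_max_scale(values):
    """Rescale numbers linearly to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(values):
    """Encode categories as 0/1 indicator lists, one position per category (sorted order)."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]

# Hypothetical feature values.
tenure_scaled = min_max_scale([2, 12, 22])  # [0.0, 0.5, 1.0]
categories, contract_encoded = one_hot(["monthly", "yearly", "monthly", "two-year"])
# categories == ["monthly", "two-year", "yearly"]
```

In a real pipeline the minimum, maximum, and category list would be learned from the training split only and then reused on the test split, so that no information from the test data leaks into preprocessing.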

5. Data Reduction: If the dataset is too large or complex, this step involves reducing the number of features or samples to make the analysis more efficient. Common techniques include feature selection and sampling.
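Both reduction ideas can be illustrated briefly: a very simple form of feature selection drops columns whose values never change (zero variance), and sampling keeps a random subset of rows. The column names and values are hypothetical; scikit-learn offers `VarianceThreshold` for the first step and Pandas offers `DataFrame.sample(frac=..., random_state=...)` for the second.

```python
import random
import statistics

def drop_low_variance(rows, names, threshold=0.0):
    """Keep only the columns whose population variance is above `threshold`."""
    keep = [i for i in range(len(names))
            if statistics.pvariance([row[i] for row in rows]) > threshold]
    kept_names = [names[i] for i in keep]
    reduced = [[row[i] for i in keep] for row in rows]
    return kept_names, reduced

def sample_rows(rows, fraction, seed=42):
    """Simple random sample without replacement; a fixed seed makes it repeatable."""
    k = round(len(rows) * fraction)
    return random.Random(seed).sample(rows, k)

# Hypothetical feature matrix: "has_phone" is identical for every customer.
names = ["tenure", "has_phone", "monthly_charge"]
rows = [[12, 1, 70.0], [40, 1, 55.0], [3, 1, 95.0], [24, 1, 60.0]]

kept_names, reduced = drop_low_variance(rows, names)  # ["tenure", "monthly_charge"]
subset = sample_rows(reduced, fraction=0.5)           # 2 of the 4 rows
```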

6. Data Discretization (Optional): If needed, numeric data can be transformed into discrete forms using techniques like binning or k-means clustering.
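Equal-width binning, the simplest discretization, splits the range of a numeric column into intervals of the same size and replaces each value with its interval index. The tenure values below are hypothetical; Pandas' `pd.cut` and scikit-learn's `KBinsDiscretizer` (with `strategy="uniform"`, or `strategy="kmeans"` for the clustering-based variant) do this with more options.

```python
def equal_width_bins(values, n_bins):
    """Assign each value a 0-based bin index using n_bins equal-width intervals."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    if width == 0:
        return [0 for _ in values]  # all values identical: a single bin
    # min() keeps the maximum value in the last bin instead of creating an extra one.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]

# Hypothetical tenure values in months, grouped into 3 bins (short, medium, long).
tenure = [1, 5, 12, 24, 36, 48, 60, 72]
tenure_bins = equal_width_bins(tenure, 3)  # [0, 0, 0, 0, 1, 1, 2, 2]
```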

7. Data Splitting: This step involves dividing the dataset into training and testing subsets. The training subset is used to train the model, while the testing subset is used to evaluate the model's performance. A common split is 70% for training and 30% for testing.
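A 70/30 split can be sketched by shuffling row positions once and cutting them at the 30% mark. The function name `split_train_test` and the toy data are hypothetical; scikit-learn's `train_test_split(X, y, test_size=0.3, random_state=...)` is the usual tool, and its `stratify=y` option keeps the churn ratio similar in both parts when the classes are imbalanced.

```python
import random

def split_train_test(rows, labels, test_size=0.3, seed=42):
    """Shuffle row positions once, then cut them into a test part and a training part."""
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)
    n_test = round(len(rows) * test_size)
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    def pick(idx, seq):
        return [seq[i] for i in idx]

    # Rows and labels use the same positions, so features and labels stay aligned.
    return (pick(train_idx, rows), pick(test_idx, rows),
            pick(train_idx, labels), pick(test_idx, labels))

# Hypothetical toy data: 10 customers, 2 features each, binary churn label.
rows = [[i, i * 10] for i in range(10)]
labels = [i % 2 for i in range(10)]

X_train, X_test, y_train, y_test = split_train_test(rows, labels)  # 7 train, 3 test
```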

Related Course Information

Category: Artificial Intelligence

Course: Persiapan Ujian Sertifikasi Internasional DSBIZ - AIBIZ (International Certification Exam Preparation, DSBIZ - AIBIZ)